A friend saw my 4-letter word display, and asked me to make one for him. It seemed like a bit of a challenge so I agreed.

I went back to the spelling dictionary, and extracted all words with 5 to eight letters - resulting in 76, 211 words. Next, I computed word adjaciencies by "edit distance": you are allowed to change a letter, insert a letter, or delete a letter to go from one word to another. I repeated the analysis I made with the 4-letter word generator. First I deleted all words with less than two other adjacent words. I repeated this process eight times, and wound up with 39, 948 words. Next, I computed the connected components, and found 1381 of them. Fortunately, the largest component has 33590 words. Finally, I computed the biconnected components on the largest connected components, and found 796 biconnected components, of which the largest contains 30129 words and 188868 adjacencies. I use this component for the random walk.

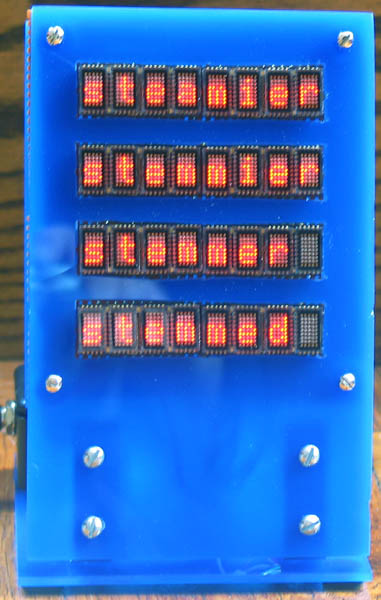

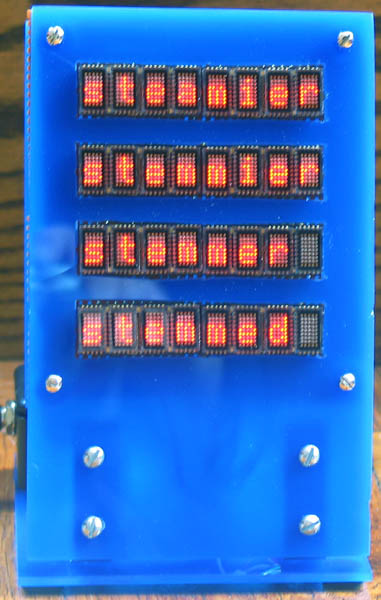

The display is built in pretty much the same way as the 4-letter word is. One complication is that the data set size is much larger. While the 4-letter word data fit into 1 Mbit of space, the 8-letter word data requires something closer to 1 Mbyte of data. Further, I couldn't find serial eeproms in that size. Fortunately, serial flash memory is available in large sizes. I wound up using two 512Kbtye Atmel at45db041b serial flash chips.

Now for some statistics. The distribution of the number of adjacencies is:

| # adjacent | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

| # words | 4553 | 4753 | 4144 | 3373 | 2649 | 2123 | 1675 | 1355 |

| # adjacent | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 |

| # words | 1107 | 817 | 757 | 562 | 475 | 378 | 323 | 257 |

| # adjacent | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 |

| # words | 201 | 165 | 106 | 92 | 61 | 55 | 32 | 28 |

| # adjacent | 26 | 27 | 28 | 29 | 30 | 31 | 32 | 33 |

| # words | 29 | 18 | 15 | 6 | 2 | 8 | 3 | 3 |

| # adjacent | 34 | 35 | 36 | 37 | 38 | 39 | 40 | 41 |

| # words | 2 | 2 | 2 | 0 | 0 | 0 | 0 | 1 |

The most connected words are:

| cares | 41 |

| pares | 36 |

| cores | 36 |

| rater | 35 |

| mines | 35 |

| lines | 34 |

| hales | 34 |

| mates | 33 |

| pales | 33 |

| wines | 33 |

| canes | 32 |

| later | 32 |

| bater | 32 |

| cases | 31 |

| cates | 31 |

| hares | 31 |

| fares | 31 |

| cones | 31 |

| pater | 31 |

| pates | 31 |

| bares | 31 |

Now that we have two examples of random walks on words, lets make some comparisons. First of all, it is evident that the four letter words are much denser and more connected than the five-to-eight letter words. In the original (unprocessed) 4-letter word graph, there are 4776 words and 50,719 adjacencies, or about 10.6 adjacencies per word. In the 8-letter unprocessed 8-letter word graph, there are 76,211 words and 244,583 adjaciencies, or about 3.2 adjacencies per word. So, 4-letter words are three times more connected than 5-to-eight letter words. Also, the most connected words above are all five letter words. The connectivity is related to the density of the words. There are about 264=456,976 possible four letter words (ignoring the occasional punctuation), so about one in 96 random 4-letter words are in the dictionary. There are about 265(1+26+262+263)=217,179,671,904 possible five to eight letter words, so only one in about 2,850,000 are in the dictionary.

The greater connectivity of the four letter words shows in the size of the final component we use for the random walk. I use 92% of the four letter words for the random walk, but only 40% of the five to eight letter words. This kind of behavior is predicted by the Giant Component theorem, due to Erdos and Renyi. They consider the number and size of connected components of a large "random graph" - one whose adjacencies are chosen randomly. They found that if the average number of adjacencies of a vertex, c, is larger than one (i.e., c>1) then the graph will have a single "giant (connected) component" with f(c)*N vertices, where N is the number of vertices and f(c) is a constant which depends on c. Further, all other connected components will be small (having no more than about Log(N) vertices). Generating an interesting random walk through words depends on the giant component, because otherwise I wouldn't be able to find many words through which to take an interesting walk.

Erdos and Renyi also produced the following formula for f(c):

f(c) = 1-( Sk=1

.. infinity(kk-1(ce-c)k)/k! )/c

How well does this formula predict the size of the largest connected component? In the case of the four letter word, f(10.6)=100%, and the largest connected component contains 99.7% of the vertices. But for the five-to-eight letter words, f(3.2)=95.3%, but the largest connected component contains only 75% of the vertices. Since the Erdos-Renyi formula consistently over-predicts the size of the giant component, it would seem that the word graphs are not that "random".

We can see that the process which generates the word graphs is not the same as the Erdos-Renyi random graphs. The E-R random graphs are a collection of vertices, with randomly chosen adjacencies. The word graphs are generated in a very different way. Each possible "word"of letters is adjacent to a specific set of other "words" - those which are one edit apart. The actual word graph is created by choosing a particular collection of words. The words are not randomly chosen, either. In the case of four letter words, each word has about 4*25=100 neighbors. Since one in 96 of the possible words are in the graph, each vertes should have about 100/96=1.04 neighbors, much less than the 10.6 that it actually has. For the five-to-eight letter words, each word has about 8+26*8+25*8=3,264 neighbors. Since only one in 2,850,000 potential words are in the dictionary, each word should have about .001 neighbors, very much less than the actual 3.2

A last question to ask is, which words appear most often in a random walk? A random walk of the kind we make on the words is modeled by a Markov chain. During a random walk in a Markov chain, at any point in time you are at a particular vertex. For the next time period, you randomly walk to one of the adjacent vertices, with a probability chosen by the "weight" of the adjacency. Over a very long period of time, the average frequency of visiting a particular node approaches a limiting distribution. There are several methods for finding this limiting distribution. If you write down all of the transition probabilities in the random walk into a matrix, you can try to solve a system of linear equations (specifically, looking for the eigenvector corresponding to eigenvalue 1). Or, you can solve the equation iteratively, by starting with a proabbility distribution vector and repeatedly multiplying this vector by the Markov chain matrix until there is little change in the vector from iteration to iteration. (This is essentially the "PageRank" algorithm which Google claims to use: the Web is modeled as a big graph, and page links determine adjacencies. The most "important" pages are those with the greatest frequency of visits in a random walk of the WWW).

Another way to find the limiting distribution is to look at the governing equations and figure out an answer. Since the graph is symmetric, and each neighboring word is equally likely to chosen as the next word, the frequency of visiting a word is directly proportional to the number of its adjacent words. That is, 'cares', with 41 neighbors, occurs more than 20 times as often in a random walk as does 'abash', which has two neighbors.